Example implementations of RNN, LSTM, and GRU using PyTorch:

import torch
import torch.nn as nn

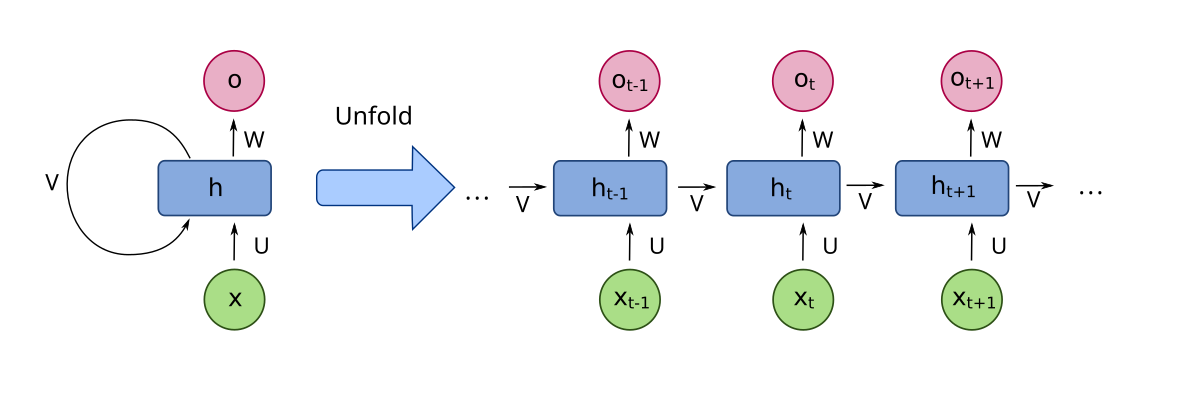

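class BasicRNNCell(nn.Module):
    """One Elman RNN step: h_t = tanh(W_ih x_t + b_ih + W_hh h_{t-1} + b_hh)."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.ih = nn.Linear(input_size, hidden_size)   # input-to-hidden
        self.hh = nn.Linear(hidden_size, hidden_size)  # hidden-to-hidden

    def forward(self, x, h):
        # x: (batch, input_size), h: (batch, hidden_size)
        h = torch.tanh(self.ih(x) + self.hh(h))
        return h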
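A cell only computes a single time step, so processing a sequence means calling it in a loop and carrying the hidden state forward. The sketch below shows one way to do that. The helper `run_rnn` and the tensor sizes are illustrative assumptions, and the cell is repeated so the snippet runs on its own.

```python
import torch
import torch.nn as nn


class BasicRNNCell(nn.Module):
    """Same cell as in the listing, repeated so this snippet is self-contained."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        self.ih = nn.Linear(input_size, hidden_size)
        self.hh = nn.Linear(hidden_size, hidden_size)

    def forward(self, x, h):
        return torch.tanh(self.ih(x) + self.hh(h))


def run_rnn(cell, xs, h0):
    """Hypothetical helper: apply `cell` to every time step of `xs`.

    xs: (seq_len, batch, input_size), h0: (batch, hidden_size).
    Returns the hidden states stacked as (seq_len, batch, hidden_size).
    """
    h, states = h0, []
    for x_t in xs:  # iterating a tensor walks its first dimension
        h = cell(x_t, h)
        states.append(h)
    return torch.stack(states)


# Illustrative sizes, chosen arbitrarily.
seq_len, batch, input_size, hidden_size = 5, 3, 4, 6
cell = BasicRNNCell(input_size, hidden_size)
xs = torch.randn(seq_len, batch, input_size)
h0 = torch.zeros(batch, hidden_size)

states = run_rnn(cell, xs, h0)
print(states.shape)  # torch.Size([5, 3, 6])
```

The last entry, `states[-1]`, is the final hidden state, which plays the role of `h_n` in the built-in `nn.RNN` for a single unidirectional layer.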

class CustomLSTMCell(nn.Module):
    """One LSTM step with separate linear layers for each gate."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        # Input gate
        self.W_ii = nn.Linear(input_size, hidden_size)
        self.W_hi = nn.Linear(hidden_size, hidden_size)
        # Forget gate
        self.W_if = nn.Linear(input_size, hidden_size)
        self.W_hf = nn.Linear(hidden_size, hidden_size)
        # Output gate
        self.W_io = nn.Linear(input_size, hidden_size)
        self.W_ho = nn.Linear(hidden_size, hidden_size)
        # Candidate cell state
        self.W_ig = nn.Linear(input_size, hidden_size)
        self.W_hg = nn.Linear(hidden_size, hidden_size)

    def forward(self, x, h, c):
        # x: (batch, input_size); h, c: (batch, hidden_size)
        i = torch.sigmoid(self.W_ii(x) + self.W_hi(h))  # how much new content to write
        f = torch.sigmoid(self.W_if(x) + self.W_hf(h))  # how much old cell state to keep
        o = torch.sigmoid(self.W_io(x) + self.W_ho(h))  # how much cell state to expose
        g = torch.tanh(self.W_ig(x) + self.W_hg(h))     # candidate values
        c = f * c + i * g
        h = o * torch.tanh(c)
        return h, c
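The listing defines hand-written cells for the RNN and the LSTM but not for the GRU. A GRU cell in the same style might look like the sketch below. The class name `CustomGRUCell` and its layer names are assumptions that mirror `CustomLSTMCell`; the gate equations follow the formulation documented for PyTorch's `nn.GRUCell`, where the reset gate multiplies the hidden-side term of the candidate state.

```python
import torch
import torch.nn as nn


class CustomGRUCell(nn.Module):
    """Hypothetical GRU cell; names are assumptions, not from the original listing."""

    def __init__(self, input_size, hidden_size):
        super().__init__()
        # Reset gate
        self.W_ir = nn.Linear(input_size, hidden_size)
        self.W_hr = nn.Linear(hidden_size, hidden_size)
        # Update gate
        self.W_iz = nn.Linear(input_size, hidden_size)
        self.W_hz = nn.Linear(hidden_size, hidden_size)
        # Candidate hidden state
        self.W_in = nn.Linear(input_size, hidden_size)
        self.W_hn = nn.Linear(hidden_size, hidden_size)

    def forward(self, x, h):
        # x: (batch, input_size), h: (batch, hidden_size)
        r = torch.sigmoid(self.W_ir(x) + self.W_hr(h))
        z = torch.sigmoid(self.W_iz(x) + self.W_hz(h))
        n = torch.tanh(self.W_in(x) + r * self.W_hn(h))
        # z close to 1 keeps the old state; z close to 0 takes the candidate.
        return (1 - z) * n + z * h


# Illustrative sizes, chosen arbitrarily.
cell = CustomGRUCell(input_size=4, hidden_size=6)
x = torch.randn(3, 4)
h = torch.zeros(3, 6)
h_next = cell(x, h)
print(h_next.shape)  # torch.Size([3, 6])
```

Unlike the LSTM cell, the GRU keeps no separate cell state, so `forward` takes and returns only `h`.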

class SequenceModel(nn.Module):
    """Thin wrapper that selects nn.RNN, nn.LSTM, or nn.GRU by name."""

    def __init__(self, input_size, hidden_size, num_layers, cell_type='lstm',
                 bidirectional=False, dropout=0.0):
        super().__init__()
        self.cell_type = cell_type.lower()
        if self.cell_type == 'rnn':
            self.rnn = nn.RNN(input_size, hidden_size, num_layers,
                              bidirectional=bidirectional, dropout=dropout)
        elif self.cell_type == 'lstm':
            self.rnn = nn.LSTM(input_size, hidden_size, num_layers,
                               bidirectional=bidirectional, dropout=dropout)
        elif self.cell_type == 'gru':
            self.rnn = nn.GRU(input_size, hidden_size, num_layers,
                              bidirectional=bidirectional, dropout=dropout)
        else:
            raise ValueError(f"Unknown cell type: {cell_type}")

    def forward(self, x, h=None):
        # batch_first is left at its default (False), so x is
        # (seq_len, batch, input_size). Returns (output, h_n) for RNN/GRU
        # and (output, (h_n, c_n)) for LSTM.
        return self.rnn(x, h)
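Because `SequenceModel.forward` returns the wrapped module's result unchanged, its input and output shapes are those of the built-in PyTorch layers. The sketch below calls `nn.LSTM` and `nn.GRU` directly to show those shapes; the sizes are arbitrary illustrative choices.

```python
import torch
import torch.nn as nn

# Illustrative sizes, chosen arbitrarily.
seq_len, batch, input_size, hidden_size, num_layers = 7, 2, 8, 16, 2

# batch_first defaults to False, so the input is (seq_len, batch, input_size).
x = torch.randn(seq_len, batch, input_size)

# Bidirectional LSTM: the state is a tuple (h_n, c_n).
lstm = nn.LSTM(input_size, hidden_size, num_layers, bidirectional=True)
out, (h_n, c_n) = lstm(x)
print(out.shape)  # torch.Size([7, 2, 32])  -> 2 directions * hidden_size
print(h_n.shape)  # torch.Size([4, 2, 16])  -> num_layers * 2 directions
print(c_n.shape)  # torch.Size([4, 2, 16])

# Unidirectional GRU: the state is a single tensor.
gru = nn.GRU(input_size, hidden_size, num_layers)
out_gru, h_gru = gru(x)
print(out_gru.shape)  # torch.Size([7, 2, 16])
print(h_gru.shape)    # torch.Size([2, 2, 16])
```

One practical consequence: when an initial state is passed explicitly, the LSTM variant expects a tuple `(h_0, c_0)`, while the RNN and GRU variants expect a single tensor, so code that calls the wrapper with `h` has to account for the chosen `cell_type`.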